What clinical trials tell you - and what they don’t

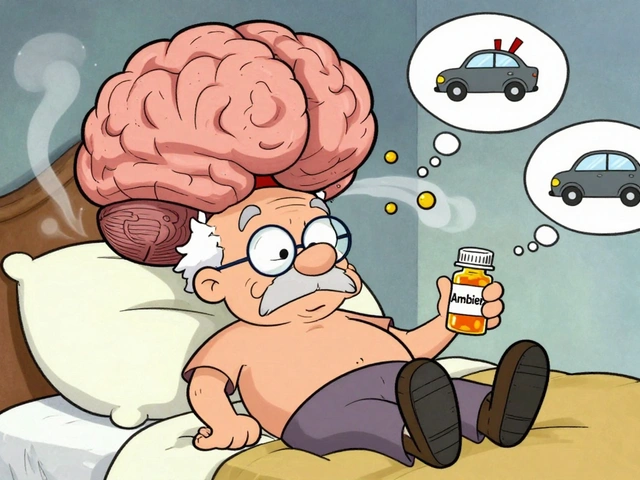

When a new drug hits the market, you hear about its success based on clinical trial results: "90% effective," "significantly better than placebo." But here’s the catch: the people in those trials aren’t representative of most patients. Clinical trials are tightly controlled. Participants are carefully selected - younger, healthier, with fewer other conditions. They get regular check-ins, strict medication schedules, and constant monitoring. In fact, as many as 80% of people who might benefit from a treatment are excluded from these studies because they have diabetes or heart disease, are over 75, or simply can’t make it to the clinic every few weeks.

This isn’t a flaw - it’s by design. Randomized controlled trials (RCTs) were created to answer one clear question: Does this treatment work under ideal conditions? The answer matters. It’s how regulators like the FDA and EMA decide if a drug can be sold. But that’s not the same as asking: Does it work for the person sitting across from their doctor?

Real-world outcomes show what actually happens

Real-world evidence (RWE) looks at what happens when drugs are used in everyday life. Think of it as watching how a car performs on city streets, not just on a test track. This data comes from electronic health records, insurance claims, wearable devices, and patient registries. It includes people with multiple chronic illnesses, older adults, different ethnic groups, and those who miss doses or can’t afford co-pays.

A 2024 study in Scientific Reports compared data from 5,734 diabetic kidney disease patients in clinical trials with 23,523 from real-world records. The results were stark. Real-world patients had more complex health issues, less consistent data collection, and far more variation in how closely treatments were followed. While clinical trials reported 92% completeness for key outcomes, real-world data reached only 68%. That gap isn’t noise - it’s reality.
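To make the completeness figures concrete, here is a minimal Python sketch of how such a percentage could be computed: the share of expected outcome fields that actually hold a value. The field names and toy records are hypothetical, and the study's own definition of completeness may differ.

```python
from typing import Optional

# Hypothetical outcome fields; the study's actual variables are not listed here.
KEY_OUTCOMES = ["egfr", "hba1c", "blood_pressure"]


def completeness(records: list[dict[str, Optional[float]]], fields: list[str]) -> float:
    """Return the fraction of expected (record, field) cells that hold a value."""
    expected = len(records) * len(fields)
    if expected == 0:
        return 0.0
    filled = sum(1 for r in records for f in fields if r.get(f) is not None)
    return filled / expected


# Toy data, invented for illustration only.
trial_records = [
    {"egfr": 58.0, "hba1c": 7.1, "blood_pressure": 132.0},
    {"egfr": 61.0, "hba1c": 6.9, "blood_pressure": 128.0},
]
real_world_records = [
    {"egfr": 55.0, "hba1c": None, "blood_pressure": 140.0},  # lab result missing
    {"egfr": None, "hba1c": 8.2},  # blood pressure never recorded
]

print(f"trial: {completeness(trial_records, KEY_OUTCOMES):.0%}")
print(f"real world: {completeness(real_world_records, KEY_OUTCOMES):.0%}")
```

A missing key and an explicit empty value are both counted as gaps here, since either one leaves an analyst without the measurement.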

Why the gap matters for patients

That difference isn’t just statistical. It changes lives. A 2023 study in the New England Journal of Medicine found that only 20% of cancer patients at academic centers met the strict criteria for clinical trials. Black patients were 30% more likely to be excluded - not because their disease was worse, but because of barriers like transportation, work schedules, or distrust of the system. When trial results say a drug works well, but most patients never got to take it under those conditions, the promise doesn’t match the practice.

Real-world data shows that drugs often work less effectively outside the trial setting. Some patients experience more side effects. Others don’t respond at all. A drug that shrinks tumors in a trial might only stabilize them in real life. That doesn’t mean the drug failed - it means we need better context.

How real-world data is changing drug development

Real-world evidence isn’t just a post-market afterthought anymore. Companies are using it to design better trials. ObvioHealth found that by selecting patients with histories of sticking to treatment plans - based on past electronic health record (EHR) data - they could reduce trial sizes by 15-25% without losing accuracy. That’s huge. Smaller trials mean faster approvals, lower costs, and quicker access for patients.

Regulators are catching on. The FDA approved 17 drugs between 2019 and 2022 using real-world data as part of the evidence - up from just one in 2015. The EMA is even further along, using RWE in 42% of post-approval safety studies in 2022. Payers like UnitedHealthcare and Cigna now require real-world proof of cost-effectiveness before covering new drugs. If a drug costs $200,000 a year, insurers want to know: Does it actually keep people out of the hospital? Does it improve quality of life? Trial data alone can’t answer that.

The hidden costs and challenges of real-world data

But real-world data isn’t magic. It’s messy. Health records are scattered across 900+ hospital systems in the U.S., each using different software. Data is incomplete. Missing lab results, inconsistent coding, patients switching providers - all of it creates gaps. And because these records weren’t designed for research, biases creep in. A patient who sees a specialist regularly might look healthier than one who only goes to the ER. That’s selection bias. Without careful statistical tools like propensity score matching, you can’t tell if a treatment worked - or if the patient was just healthier to begin with.
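The matching step behind propensity score matching can be sketched in a few lines of Python. This is a simplified illustration, not a production method: it assumes each patient's propensity score (the estimated probability of receiving the treatment) has already been computed, and it uses greedy 1:1 nearest-neighbor matching with a caliper. The `Patient` fields, the caliper value, and the toy data are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    patient_id: str
    treated: bool
    propensity: float  # assumed precomputed, e.g. by a logistic regression
    outcome: float     # toy coding: 1.0 = hospitalized, 0.0 = not


def match_pairs(patients, caliper=0.05):
    """Greedy 1:1 nearest-neighbor matching on propensity, without replacement."""
    treated = sorted((p for p in patients if p.treated), key=lambda p: p.propensity)
    controls = [p for p in patients if not p.treated]
    pairs = []
    for t in treated:
        if not controls:
            break
        best = min(controls, key=lambda c: abs(c.propensity - t.propensity))
        # Only accept matches that are close enough; otherwise drop the patient.
        if abs(best.propensity - t.propensity) <= caliper:
            pairs.append((t, best))
            controls.remove(best)
    return pairs


def naive_difference(patients):
    """Treated mean outcome minus untreated mean outcome, ignoring confounding."""
    t = [p.outcome for p in patients if p.treated]
    c = [p.outcome for p in patients if not p.treated]
    return sum(t) / len(t) - sum(c) / len(c)


def matched_difference(pairs):
    """Average treated-minus-control outcome across matched pairs."""
    if not pairs:
        raise ValueError("no matched pairs within the caliper")
    return sum(t.outcome - c.outcome for t, c in pairs) / len(pairs)


# Toy cohort: healthier patients are more likely to be treated.
cohort = [
    Patient("T1", True, 0.80, 0.0),
    Patient("T2", True, 0.75, 0.0),
    Patient("T3", True, 0.30, 1.0),
    Patient("C1", False, 0.78, 0.0),
    Patient("C2", False, 0.32, 1.0),
    Patient("C3", False, 0.20, 1.0),
    Patient("C4", False, 0.10, 1.0),
]

pairs = match_pairs(cohort)
print("naive difference:", round(naive_difference(cohort), 3))
print("matched difference:", matched_difference(pairs))
```

On this toy cohort the naive comparison suggests that treated patients are hospitalized less often, while the matched comparison shows no difference: the apparent benefit came from who received the drug, not from the drug itself.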

Only 35% of healthcare organizations have dedicated teams to handle this kind of analysis. And even when they do, merging real-world data with clinical trial data fails 63% of the time, according to Nature Communications, because the data structures don’t line up. It’s like trying to combine two different languages without a dictionary.
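A small Python sketch shows what "data structures don't line up" can look like in practice. The two source schemas, the field names, and the mapping tables below are hypothetical; real harmonization also has to reconcile units, code systems, and patient identity, which this example does not attempt.

```python
# Hypothetical mappings from each source's field names onto one shared schema.
TRIAL_MAP = {"subject_id": "patient_id", "hba1c_pct": "hba1c_percent"}
EHR_MAP = {"mrn": "patient_id", "a1c": "hba1c_percent"}


def harmonize(record, field_map):
    """Rename known fields to the shared schema and list fields with no mapping."""
    mapped, unmapped = {}, []
    for key, value in record.items():
        if key in field_map:
            mapped[field_map[key]] = value
        else:
            unmapped.append(key)
    return mapped, unmapped


# Toy rows, invented for illustration only.
trial_row = {"subject_id": "S-001", "hba1c_pct": 7.1}
ehr_row = {"mrn": "884213", "a1c": 8.2, "visit_note": "follow-up"}

trial_clean, trial_leftover = harmonize(trial_row, TRIAL_MAP)
ehr_clean, ehr_leftover = harmonize(ehr_row, EHR_MAP)

print(trial_clean, trial_leftover)
print(ehr_clean, ehr_leftover)
```

Returning the unmapped fields instead of silently dropping them keeps the "dictionary" problem visible: every leftover field is a decision someone still has to make.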

Regulators are trying to fix the system

The FDA’s 2023 Real-World Evidence Framework now requires companies to submit detailed data quality reports before using RWE in submissions. The 2022 VALID Health Data Act, passed by the U.S. Senate HELP Committee, aims to create national standards for collecting and sharing health data - a direct response to the 2019 Nature study showing only 39% of RWE studies could be replicated.

The NIH’s HEAL Initiative, backed by $1.5 billion, is using real-world data to find alternatives to opioids for chronic pain. Flatiron Health’s oncology database - built over five years and costing $175 million - now tracks 2.5 million cancer patients across 280 clinics. Roche bought it for $1.9 billion because it works. The infrastructure is expensive, but the payoff is real.

The future isn’t RCTs vs. RWE - it’s RCTs and RWE

Some critics, like Stanford’s Dr. John Ioannidis, warn that enthusiasm for real-world evidence has outpaced the science. There have been cases where RWE showed a drug was harmful, while RCTs said it was safe - and vice versa. That’s not because one is wrong. It’s because they answer different questions.

The smartest approach now is hybrid trials. The FDA’s 2024 draft guidance encourages designs that start with a controlled trial to prove safety and efficacy, then follow up with real-world monitoring to see how the treatment performs in diverse populations. AI is helping too. Google Health’s 2023 study showed algorithms could predict treatment outcomes from EHR data with 82% accuracy - better than traditional RCT analysis in some cases.

Real-world outcomes don’t replace clinical trials. They complete them. Trials tell you if a drug can work. Real-world data tells you if it will work for you.

What this means for you

If you’re a patient, don’t assume a drug that worked in a trial will work exactly the same for you. Ask your doctor: "Was this tested on people like me?" If you’re a caregiver, understand that side effects or lack of response might not mean the treatment failed - it might just mean the trial didn’t reflect your reality.

If you’re in healthcare or policy, the message is clear: stop treating clinical trials and real-world data as rivals. They’re partners. One sets the standard. The other tells you if the standard holds up in the real world.